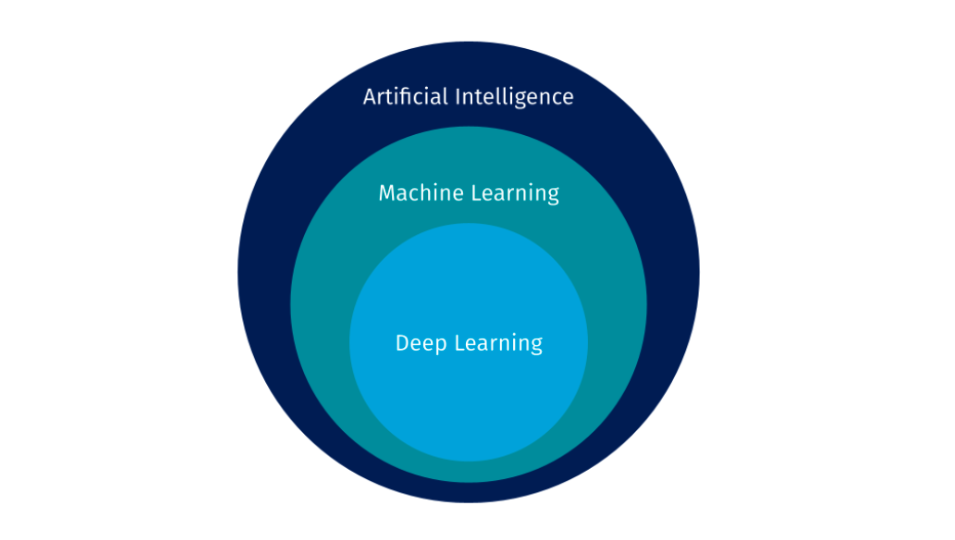

In the realm of modern technology, machine learning stands as a cornerstone, revolutionizing industries, transforming businesses, and shaping our everyday lives. At its core, machine learning represents a subset of artificial intelligence (AI) that empowers systems to learn from data iteratively, uncover patterns, and make predictions or decisions with minimal human intervention. Demystifying machine learning matters because it is an invitation to explore the transformative potential of AI in your own life.

In a world where technology increasingly shapes our experiences and decisions, understanding machine learning opens doors to unprecedented opportunities. From personalized recommendations that enhance your shopping experience to predictive models that optimize supply chains and improve healthcare outcomes, AI is transforming industries and changing how we interact with the world around us.

This article explores the essence of machine learning, its fundamental concepts, and real-world applications across diverse industries, as well as its limitations and ethical considerations. By demystifying machine learning, we empower individuals and businesses to harness the power of data-driven insights, unlocking new possibilities and driving innovation forward. Whether you’re a seasoned data scientist or a curious novice, exploring what AI can do for you is a journey of discovery, empowerment, and endless possibilities.

Understanding Machine Learning

Machine learning empowers computers to learn from experience, enabling them to perform tasks without being explicitly programmed for each step. It operates on the premise of algorithms that iteratively learn from data, identifying patterns and making informed decisions.

Unlike traditional programming, where explicit instructions are provided, machine learning systems adapt and evolve as they encounter new data. This adaptability lies at the heart of machine learning’s capabilities, enabling it to tackle complex problems and deliver insights that were previously unattainable.

Before turning to the two main types of machine learning, supervised and unsupervised learning, it is worth mentioning the primary programming language used in data science.

Python, which is taught and used extensively in the Flatiron School Data Science Bootcamp, has emerged as the de facto language for machine learning thanks to its simple syntax, extensive ecosystem of libraries, and excellent community support and documentation. It is also robust and scalable, and it integrates with other data science tools and workflows such as Jupyter notebooks, Anaconda, R, SQL, and Apache Spark.

Supervised learning

Supervised learning involves training a model on labeled data, where inputs and corresponding outputs are provided. The model learns to map input data to the correct output during the training process. Common algorithms in supervised learning include linear regression, decision trees, support vector machines, and neural networks. Applications of supervised learning range from predicting stock prices and customer churn in businesses to medical diagnosis and image recognition in healthcare.
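To make the idea of learning a mapping from labeled inputs to outputs concrete, here is a minimal sketch of linear regression fitted by ordinary least squares in plain Python. The data (hours studied and exam scores) and the function name `fit_line` are hypothetical, chosen only for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled data: hours studied (input) and exam score (output).
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 56.0, 58.0, 60.0]

# "Training" estimates the parameters from the labeled examples.
slope, intercept = fit_line(hours, scores)

# The fitted model can then predict the output for an unseen input.
predicted = slope * 6.0 + intercept
print(slope, intercept, predicted)
```

Because the toy data lies exactly on a line, the fit recovers a slope of 2 and an intercept of 50. Real data is noisy, and the fitted line is then the one that minimizes the squared error.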

Unsupervised learning

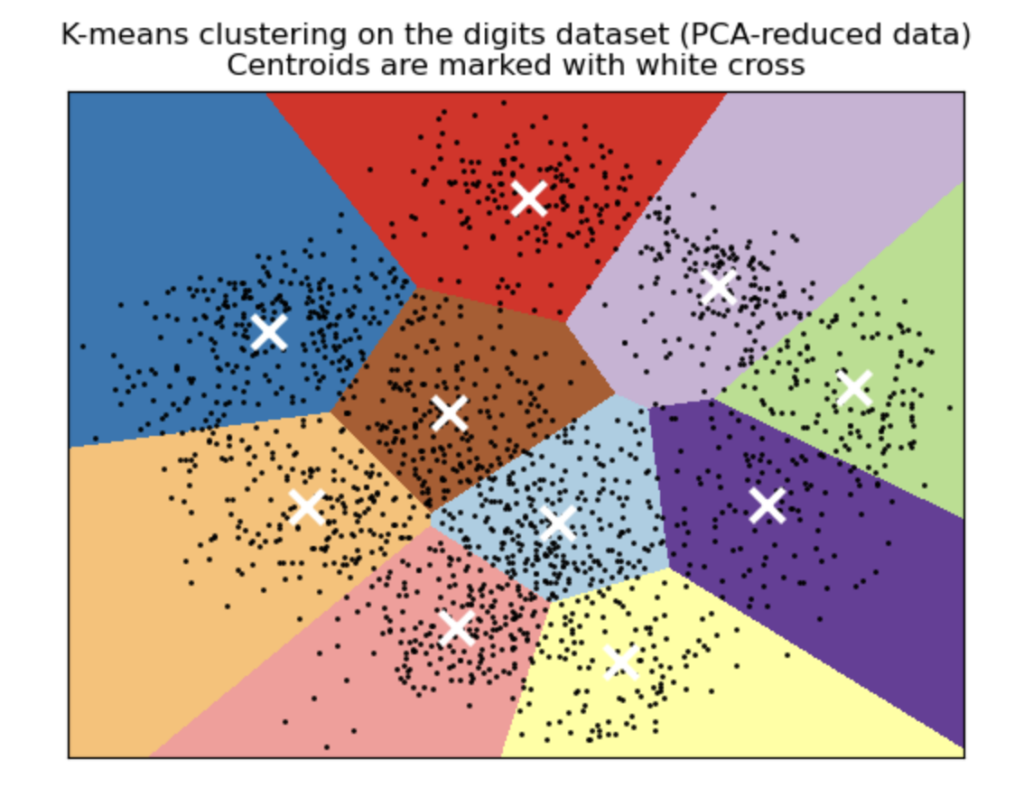

In unsupervised learning, the model is presented with unlabeled data and tasked with finding hidden patterns or structures within it. Unlike supervised learning, there are no predefined outputs, and the algorithm explores the data to identify inherent relationships. Clustering, dimensionality reduction, and association rule learning are common techniques in unsupervised learning. Real-world applications include customer segmentation, anomaly detection, and recommendation systems.
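As an illustration of clustering, the following sketch runs a bare-bones version of k-means (Lloyd's algorithm) on one-dimensional data in plain Python. The spending figures, the starting centers, and the function name `k_means_1d` are assumptions made for this example.

```python
def k_means_1d(values, centers, iterations=10):
    """Cluster 1-D values around the given starting centers."""
    centers = list(centers)
    clusters = [[] for _ in centers]
    for _ in range(iterations):
        # Assignment step: each value joins its nearest center.
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Update step: move each center to the mean of its cluster.
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters


# Hypothetical unlabeled data: customers' monthly spend.
spend = [12, 15, 14, 90, 95, 88]
centers, clusters = k_means_1d(spend, centers=[0.0, 100.0])
print(centers, clusters)
```

No labels are supplied, yet the algorithm separates the low spenders from the high spenders. This is the same idea behind customer segmentation at a much larger scale.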

Machine learning algorithms

Machine learning algorithms serve as the backbone of data-driven decision-making. These algorithms encompass a diverse range of techniques tailored to specific tasks and data types. Some prominent algorithms include:

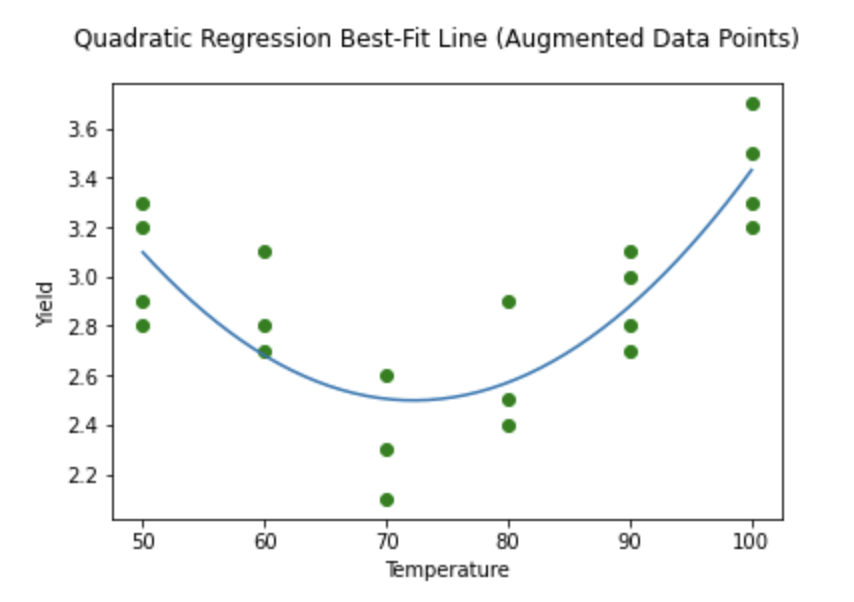

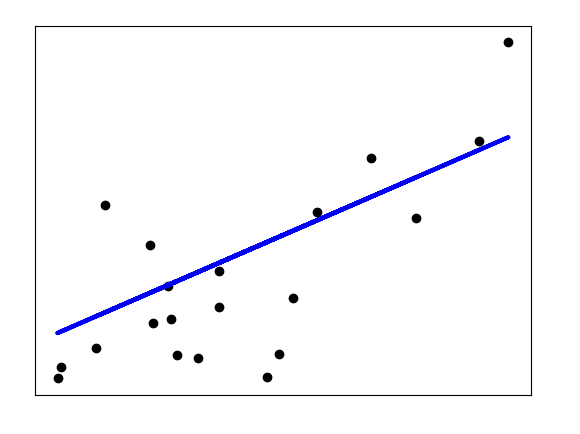

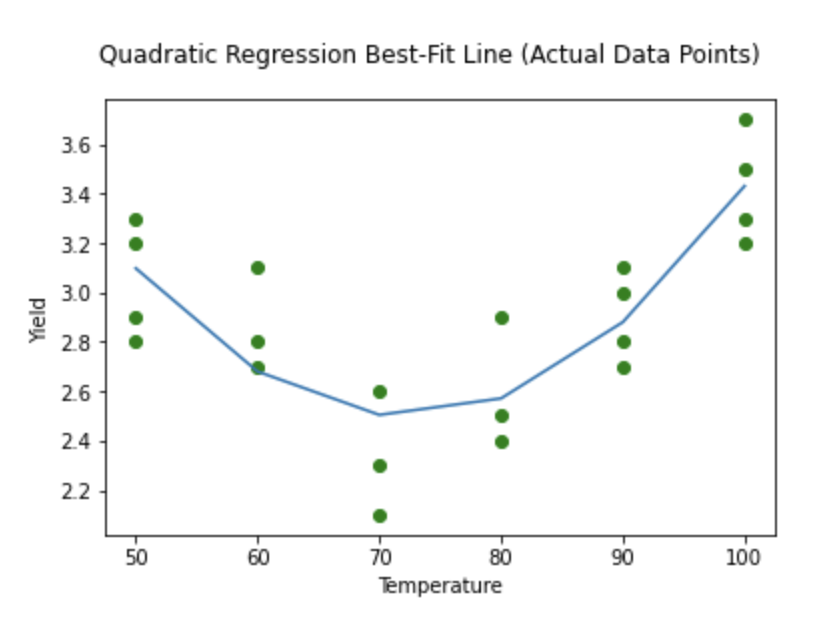

- Linear Regression: A simple yet powerful algorithm used for modeling the relationship between a dependent variable and one or more independent variables.

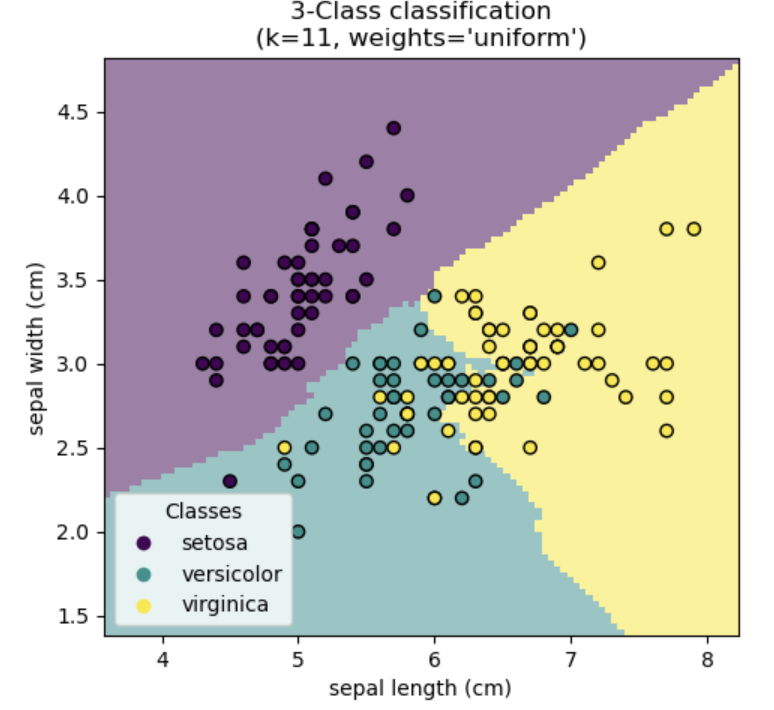

- Decision Trees: Hierarchical structures that recursively partition data based on features to make decisions. Decision trees are widely employed for classification and regression tasks.

- Support Vector Machines (SVM): A versatile algorithm used for both classification and regression tasks. In classification, an SVM aims to find the hyperplane that separates the classes with the widest possible margin.

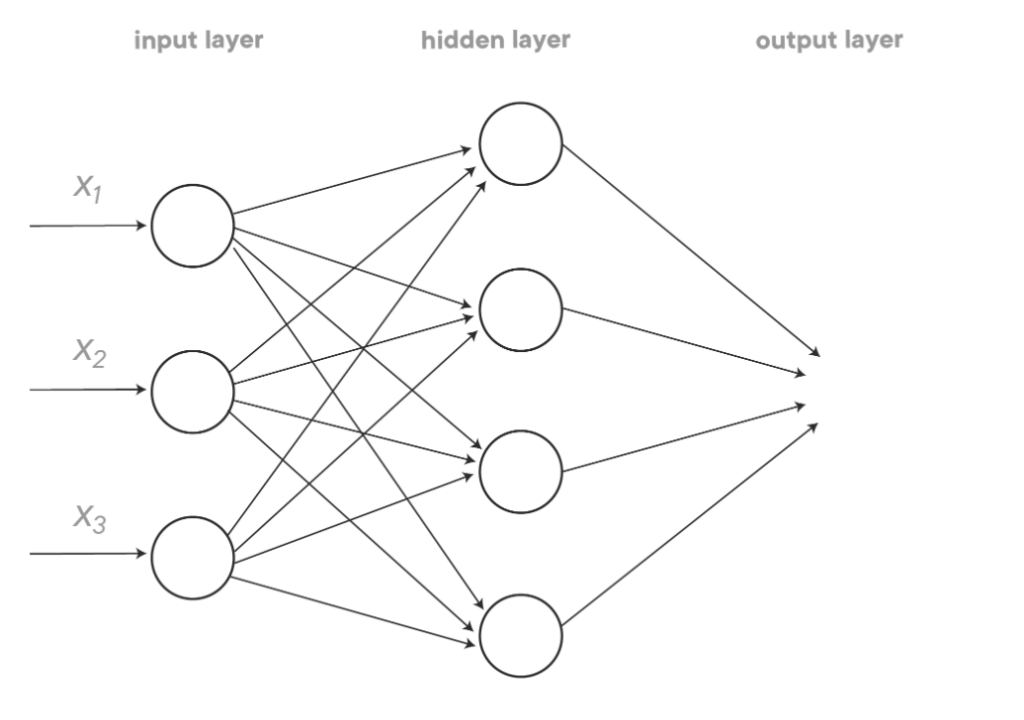

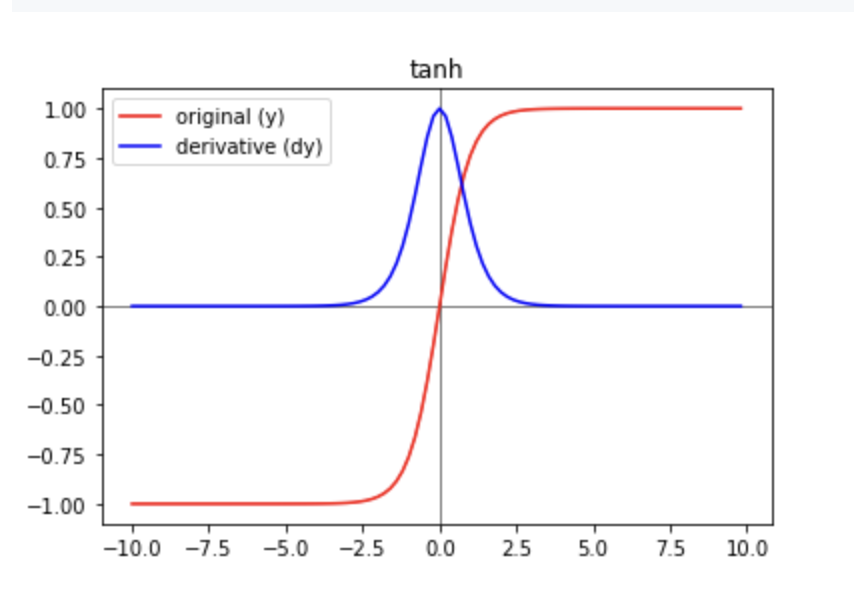

- Neural Networks: Inspired by the human brain, neural networks consist of interconnected nodes organized in layers. Deep neural networks, in particular, have gained prominence for their ability to handle complex data and tasks such as image recognition, natural language processing, and reinforcement learning.

All of these algorithms can be implemented in Python using very similar syntax.
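One way to see the shared syntax is with scikit-learn. The article does not name a library, so the choice of scikit-learn is an assumption here, and the sketch requires it to be installed. Each of the four algorithm families above is exposed as an estimator with the same `fit` and `predict` calls. The toy data is hypothetical.

```python
from sklearn.linear_model import LinearRegression
from sklearn.neural_network import MLPRegressor
from sklearn.svm import SVR
from sklearn.tree import DecisionTreeRegressor

# Hypothetical toy data: one feature, target roughly 2 * x.
X = [[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]]
y = [2.1, 3.9, 6.2, 8.0, 9.8, 12.1]

models = {
    "linear regression": LinearRegression(),
    "decision tree": DecisionTreeRegressor(random_state=0),
    "support vector machine": SVR(kernel="linear"),
    "neural network": MLPRegressor(
        hidden_layer_sizes=(8,), max_iter=2000, random_state=0
    ),
}

predictions = {}
for name, model in models.items():
    model.fit(X, y)  # same training call for every model
    predictions[name] = model.predict([[7.0]])[0]  # same prediction call

for name, value in predictions.items():
    print(f"{name}: {value:.2f}")
```

Only the line that constructs each model differs. The predictions themselves will vary by algorithm. For example, a decision tree cannot extrapolate beyond the targets it saw in training, so the numbers should be read as a syntax demonstration and not as a comparison of accuracy.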

Real-world Applications Across Industries

Machine learning’s transformative potential transcends boundaries, permeating various industries and sectors. Notable examples include healthcare, finance, retail and e-commerce, manufacturing, and transportation and logistics.

Healthcare

In healthcare, machine learning aids in medical diagnosis, drug discovery, personalized treatment plans, and predictive analytics for patient outcomes. Image analysis techniques enable early detection of diseases from medical scans, while natural language processing facilitates the extraction of insights from clinical notes and research papers.

Finance

In the finance sector, machine learning powers algorithmic trading, fraud detection, credit scoring, and risk management. Predictive models analyze market trends, identify anomalies in transactions, and assess the creditworthiness of borrowers, enabling informed decision-making and mitigating financial risks.

Retail and e-commerce

For retail and e-commerce, machine learning enhances customer experience through personalized recommendations, demand forecasting, and inventory management. Sentiment analysis extracts insights from customer reviews and social media interactions, guiding marketing strategies and product development efforts.

Manufacturing

In manufacturing, machine learning optimizes production processes, predicts equipment failures, and ensures quality control. Predictive maintenance algorithms analyze sensor data to anticipate machinery breakdowns, minimizing downtime and maximizing productivity.

Transportation and logistics

Lastly, for transportation and logistics, machine learning optimizes route planning, vehicle routing, and supply chain management. Predictive analytics anticipate demand fluctuations, enabling timely adjustments in inventory levels and distribution strategies.

Limitations and Responsible AI Use

While machine learning offers immense potential, it also presents ethical and societal challenges that demand careful consideration.

Bias and fairness

Machine learning models may perpetuate or amplify biases present in the training data, leading to unfair or discriminatory outcomes. It is imperative to mitigate bias by ensuring diverse and representative datasets and implementing fairness-aware algorithms.
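A first step toward mitigating bias is measuring it. The sketch below computes one simple fairness check, the gap in positive-prediction rates between groups (often called the demographic parity difference). The loan-approval predictions, the group labels, and the function name `selection_rates` are hypothetical, and this is only one of several fairness metrics in use.

```python
def selection_rates(predictions, groups):
    """Share of positive predictions (1s) within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / totals[g] for g in totals}


# Hypothetical loan-approval predictions (1 = approved) for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
# A gap of 0 means both groups are approved at the same rate.
parity_gap = max(rates.values()) - min(rates.values())
print(rates, parity_gap)
```

Here group "a" is approved 75% of the time and group "b" only 25% of the time, a gap of 0.5 that would warrant a closer look at the training data and the model.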

Privacy concerns

Machine learning systems often rely on vast amounts of personal data, raising concerns about privacy infringement and data misuse. Robust privacy-preserving techniques such as differential privacy and federated learning are essential to safeguard sensitive information.
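To give a flavor of differential privacy, here is a minimal sketch of the Laplace mechanism applied to a counting query, using only the Python standard library. The ages, the `epsilon` value, and the function names are assumptions for illustration. A production system would rely on a vetted library and not on hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng):
    """Counting query with Laplace noise.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


# Hypothetical sensitive data: patient ages.
ages = [23, 35, 41, 52, 67, 29, 48]
rng = random.Random(0)  # seeded only so the example is repeatable

noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(noisy)
```

The true count is 4, but the released value is perturbed so that no single person's presence in the data can be confidently inferred. A smaller `epsilon` means more noise and stronger privacy, and a larger one means less noise and a more accurate answer.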

Interpretability and transparency

Complex machine learning models, particularly deep neural networks, are often regarded as black boxes, making it challenging to interpret their decisions. Enhancing model interpretability and transparency fosters trust and accountability, enabling stakeholders to understand and scrutinize algorithmic outputs.

Security risks

Machine learning models are vulnerable to adversarial attacks, where malicious actors manipulate input data to deceive the model’s predictions. Robust defenses against adversarial attacks, such as adversarial training and input sanitization, are critical to ensuring the security of machine learning systems.
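The following sketch shows how small an adversarial change can be. It uses a hypothetical linear classifier with made-up weights and nudges every input feature by a small `epsilon` in the direction that lowers the model's score, in the spirit of the fast gradient sign method. All names and numbers are assumptions for illustration.

```python
def predict(weights, bias, x):
    """Linear classifier: 1 if the weighted sum is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def adversarial_example(weights, x, epsilon):
    """Shift each feature by epsilon against its weight's sign.

    For a linear model this lowers the score by epsilon * sum(|w|).
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical trained model and a legitimate input.
weights, bias = [2.0, -1.0, 0.5], -0.5
x = [0.6, 0.4, 0.2]

x_adv = adversarial_example(weights, x, epsilon=0.2)
print(predict(weights, bias, x), predict(weights, bias, x_adv))
```

Each feature moves by only 0.2, yet the prediction flips from 1 to 0. Adversarial training counters this by including such perturbed inputs, with their correct labels, in the training data.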

Conclusion

Now that machine learning has been demystified, we can see what AI can do for us. Machine learning epitomizes the convergence of data, algorithms, and computation, ushering in a new era of innovation and transformation across industries. From healthcare and finance to retail and manufacturing, its applications are ubiquitous, reshaping the way we perceive and interact with the world.

However, this technological prowess must be tempered with a commitment to responsible and ethical use, addressing concerns related to bias, privacy, transparency, and security. By embracing ethical principles and leveraging machine learning for societal good, we can harness its full potential to advance human well-being and prosperity in the digital age. Thus, by demystifying machine learning, we unveil a world of possibilities where AI becomes not just a buzzword, but a tangible tool for enhancing productivity, efficiency, and innovation.

Flatiron School Teaches Machine Learning

Our Data Science Bootcamp offers education in fundamental and advanced machine learning topics. Students gain hands-on AI skills that prepare them for high-paying careers in fast-growing fields like AI engineering and data analysis. Download the bootcamp syllabus to see what you’ll learn. If you would like to learn more about financing, including flexible payment options and scholarships, schedule a 10-minute call with our Admissions team.