Data Science

Alumni Stories

Kendall McNeil: From Project Management to Data Science

"When I found Flatiron School, I was excited about the opportunity to level up my coding skills and gain a deeper understanding of machine learning and AI."

Tech Trends

Intro to Predictive Modeling: A Guide to Building Your First Machine Learning Model

Predictive modeling in data science involves using statistical algorithms and machine learning techniques to build models that predict future outcomes or behaviors based on historical data. It encompasses various steps, including data preprocessing, feature selection, model training, evaluation, and deployment.

Tech Trends

Mapping Camping Locations for the 2024 Total Solar Eclipse

Find camping locations within the path of totality of the 2024 total solar eclipse with our data visualizations, which merge eclipse-path and park geospatial datasets.

Tech Trends

Introduction to Natural Language Processing (NLP) in Data Science

Natural Language Processing (NLP) encompasses a variety of techniques designed to enable computers to understand and process human languages. In this post, you’ll learn about NLP applications like text classification and sentiment analysis, plus NLP techniques like tokenization and stemming.

Artificial Intelligence

Enhancing Your Tech Career with Remote Collaboration Skills

Learning to collaborate effectively with co-workers in a remote work setting is a critical skill to hone. Read on to find out how Flatiron’s AI-focused hackathon is helping the school’s graduates sharpen this skill, along with many others.

Artificial Intelligence

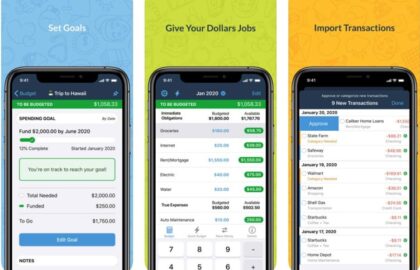

The 8 Things People Want Most from an AI Personal Finance Platform

Smooth bank integrations and rock-solid security are just two things people want most from an AI personal finance platform. Read on to learn the other six, and find out how Flatiron’s Hackonomics hackathon teams will incorporate all eight into their Money Magnet final projects.

Tech Trends

Decoding Data Jargon: Your Key to Understanding Data Science Terms

Use data science terms such as mean, median, standard deviation, correlation, and hypothesis testing only if you are confident you can explain them. This post walks readers through explanations of some of the most common data science terms.

Browse by Category

- All Categories

- Admissions

- Alumni Stories

- Announcements

- Artificial Intelligence

- Career Advice

- Cybersecurity Engineering

- Data Science

- Denver Campus

- Diversity In Tech

- Enterprise

- Flatiron School

- Hackathon

- How To

- NYC Campus

- Online Campus

- Partnerships

- Software Engineering

- Staff / Coach Features

- Tech Trends

- UX / UI Product Design

- Women In Tech