Starting a journey in data science can feel like navigating a vast, uncharted territory. With so much data available across every industry, from healthcare to finance, how do professionals transform raw information into valuable insights? The answer lies in having a structured, reliable process. One of the most trusted frameworks in the industry is CRISP-DM.

CRISP-DM provides a roadmap for data science projects, ensuring that every step, from understanding the business need to deploying a solution, is clear, organized, and effective. By following this lifecycle, you can turn complex problems into actionable results and build a strong foundation for your data science career.

What is CRISP-DM?

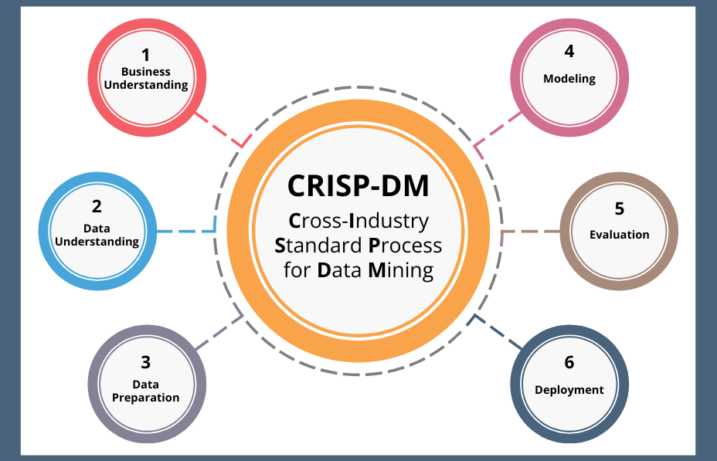

CRISP-DM stands for Cross-Industry Standard Process for Data Mining. Developed in the 1990s, it emerged from the need for a standardized way to approach data projects. As organizations began to realize the power of data, they needed a common language and methodology to guide their efforts, manage teams, and communicate with stakeholders.

CRISP-DM is a flexible, six-phase lifecycle that guides data professionals from the initial problem to the final solution. It’s not a rigid set of rules but a cyclical and iterative model, meaning you can move back and forth between phases as you learn more about the data and the business problem. This adaptability makes it a powerful tool for solving real-world challenges.

Why a Standardized Process Matters

Imagine trying to build a house without a blueprint. You might have all the right materials, but without a plan, the process would be chaotic and the final structure unstable. CRISP-DM acts as that blueprint for data science projects.

It helps professionals:

- Explore relationships to find opportunities.

- Quantify risks and rewards associated with different strategies.

- Identify potential hazards and sources of inefficiency.

- Warn stakeholders about future challenges, like a key supplier going out of business.

By providing structure, CRISP-DM allows data teams to manage complex projects, keep stakeholders informed, and ensure that the final output delivers real value.

The Six Phases of the CRISP-DM Lifecycle

The CRISP-DM model is broken down into six distinct yet interconnected phases. The process is cyclical, often returning to the beginning as new insights lead to new questions.

1. Business Understanding

Every data project begins with a question. The Business Understanding phase is dedicated to defining the problem you want to solve. This involves close collaboration with stakeholders, subject matter experts (SMEs), and IT professionals to get everyone on the same page.

Key activities in this phase include:

- Defining the objective: What are you trying to accomplish? Are you predicting a category (e.g., fraudulent vs. legitimate transaction), a value (e.g., stock price), or identifying patterns (e.g., customer segments)?

- Asking the right questions: Decompose a complex problem into simpler, more specific ones. For example, a vague goal like “improve marketing” can be broken down into “which customer groups respond best to our advertising?”

- Gaining preliminary data access: Get an initial look at the data that might help solve the problem.

This phase is iterative. As you gain a better understanding of the data in the next phase, you will often circle back to refine the business problem.

2. Data Understanding

Once you have a business problem, you need to understand the data you have to work with. This phase is about exploring your dataset to assess its quality, identify features, and get a feel for its characteristics.

A critical concept here is that the map is not the territory. Your data is a representation of reality, not reality itself. It can be outdated, incomplete, or contain errors from bad sensors or manual entry mistakes. Always approach your data with healthy skepticism.

Activities in this phase include:

- Initial Exploratory Data Analysis (EDA): Use basic visualizations and statistics to answer simple questions and orient yourself.

- Descriptive Statistics: Calculate the mean, median, mode, and value counts to understand the central tendencies and distribution of your data.

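- Identifying Data Types: Determine if your data is nominal (labels like colors), ordinal (ranks like 1st, 2nd), interval (evenly spaced values with no true zero, like temperature in Celsius), or ratio (values with a true zero, like temperature in Kelvin or a number of transactions). Understanding this tells you what mathematical operations are valid. For example, averaging nominal labels is meaningless, and a statement like “twice as much” only makes sense on a ratio scale.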
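
As a rough illustration of the activities above, here is a minimal pandas sketch. The DataFrame, its column names (`amount`, `channel`), and its values are invented for this example and are not drawn from any real dataset.

```python
import pandas as pd

# Hypothetical transaction data, invented purely for illustration.
df = pd.DataFrame({
    "amount": [12.5, 40.0, 12.5, 300.0, 18.0],
    "channel": ["web", "store", "web", "web", "app"],
})

# Column data types hint at which operations are valid for each feature.
print(df.dtypes)

# Central tendency for a numeric (ratio-scale) column.
mean_amount = df["amount"].mean()
median_amount = df["amount"].median()
mode_amount = df["amount"].mode().iloc[0]

# Frequency of each label in a nominal column.
channel_counts = df["channel"].value_counts()

print(mean_amount, median_amount, mode_amount)
print(channel_counts)
```

In this toy data the mean sits well above the median because of a single large transaction, which is the kind of signal worth investigating before moving on.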

3. Data Preparation

Data preparation is often the most time-consuming phase of a project, because raw data is rarely ready for modeling. This phase involves cleaning, transforming, and structuring your data into a usable format.

Fundamental steps include:

- Environment Setup: Choose your tools, such as a Jupyter Notebook, Google Colab, or a code editor like VS Code.

- Importing Libraries: Load essential Python libraries like pandas for data manipulation, Matplotlib and Seaborn for visualization, and NumPy for numerical operations.

- Handling Missing Values and Outliers: Decide whether to remove, replace, or impute null values. Investigate outliers to determine if they are legitimate data points or errors.

- Getting Data into Computable Form: Organize your data into a structure like a pandas DataFrame.

This phase is also iterative with the next one. As you begin modeling, you’ll often discover new data preparation steps you need to take.
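
The sketch below shows one possible way to handle a missing value and flag an outlier with pandas. The `temperature` column and its readings are hypothetical, and both median imputation and the 1.5 × IQR rule are common conventions chosen for illustration, not the only valid choices.

```python
import numpy as np
import pandas as pd

# Hypothetical sensor readings with one missing value and one suspicious spike.
df = pd.DataFrame({"temperature": [21.0, 22.5, np.nan, 23.0, 21.5, 95.0]})

# Impute the missing value with the median, which is less sensitive
# to extreme values than the mean.
median_temp = df["temperature"].median()
df["temperature"] = df["temperature"].fillna(median_temp)

# Flag outliers using the 1.5 * IQR rule (an assumption for this sketch).
q1 = df["temperature"].quantile(0.25)
q3 = df["temperature"].quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
df["is_outlier"] = (df["temperature"] < lower) | (df["temperature"] > upper)

print(df)
```

Flagging rather than deleting the outlier keeps the decision open: a reading of 95 might be a faulty sensor, or it might be a real event that the business cares about.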

4. Modeling

With prepared data, you can now build and train models to find patterns and make predictions. This phase is about selecting the right algorithms and techniques to address your business problem.

Key steps in modeling are:

- Establishing a Baseline: Create a simple model to get initial metrics. This baseline becomes the benchmark against which you can measure improvement.

- Selecting an Algorithm: Choose a model based on your goal (e.g., linear regression for predicting a value, classification algorithms for predicting a category).

- Training and Refining: Train your model on the data and refine its parameters. You may need to add or remove variables to improve performance.

- Comparing Models: Test multiple models and compare their metrics to find the one that performs best.

It’s okay if your first model is terrible! The goal isn’t to achieve perfection on the first try but to build a starting point for improvement.
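
To make the baseline idea concrete, here is a small NumPy sketch that compares a "predict the mean" baseline with a least-squares line. The data points are made up, and both models are scored on the same data they were fitted to only to keep the example short; in a real project you would typically hold out separate test data.

```python
import numpy as np

# Hypothetical data: advertising spend (x) and resulting sales (y).
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])

def rmse(actual, predicted):
    """Root mean square error between two arrays."""
    return float(np.sqrt(np.mean((actual - predicted) ** 2)))

# Baseline: always predict the mean of the target.
baseline_pred = np.full_like(y, y.mean())

# Candidate model: a straight line fitted by least squares.
slope, intercept = np.polyfit(x, y, 1)
linear_pred = slope * x + intercept

baseline_rmse = rmse(y, baseline_pred)
linear_rmse = rmse(y, linear_pred)
print(f"baseline RMSE: {baseline_rmse:.3f}, linear RMSE: {linear_rmse:.3f}")
```

If a candidate model cannot beat a baseline this simple, that is a useful early signal to revisit the features or the data preparation steps.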

5. Evaluation

Before deploying a model, you must evaluate its performance to ensure it is accurate, reliable, and addresses the business objective. This phase is about validating your work with rigorous metrics.

Two common metrics for evaluating models that predict a numeric value are:

- R-squared (R²): This tells you what proportion of the variance in the target variable your model explains. A score closer to 1.0 is better, but even a score of 0.3 or 0.4 can be very useful depending on the context.

- Root Mean Square Error (RMSE): This measures the typical size of your model’s prediction errors in the original units (e.g., dollars, degrees). Because errors are squared before they are averaged, large misses count more heavily. A lower RMSE is better.

These numbers are most useful for comparing different models. A higher R² score and a lower RMSE generally indicate a better model.
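
For readers who want to see how these two numbers are computed, here is a minimal NumPy sketch using their standard definitions. The function names `r_squared` and `rmse` and the sample values are illustrative choices for this example.

```python
import numpy as np

def r_squared(actual, predicted):
    """Proportion of variance in `actual` explained by `predicted`."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    ss_res = np.sum((actual - predicted) ** 2)      # variation the model misses
    ss_tot = np.sum((actual - actual.mean()) ** 2)  # total variation in the target
    return float(1 - ss_res / ss_tot)

def rmse(actual, predicted):
    """Root mean square error, in the same units as the target."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean((actual - predicted) ** 2)))

# Made-up values: the model is off by 1 on two of four predictions.
actual = [3, 5, 7, 9]
predicted = [2, 5, 8, 9]

r2_value = r_squared(actual, predicted)
rmse_value = rmse(actual, predicted)
print(round(r2_value, 3), round(rmse_value, 3))  # 0.9 0.707
```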

For classification problems, evaluation may instead involve metrics like accuracy, precision, recall, or ROC-AUC.
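
As a quick sketch of how three of these classification metrics relate to each other, the example below computes accuracy, precision, and recall by hand from hypothetical fraud labels (1 = fraudulent, 0 = legitimate). The labels are invented for illustration.

```python
# Hypothetical labels: 1 = fraudulent, 0 = legitimate.
actual    = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 0, 0, 1, 1, 0]

pairs = list(zip(actual, predicted))
tp = sum(1 for a, p in pairs if a == 1 and p == 1)  # fraud correctly caught
tn = sum(1 for a, p in pairs if a == 0 and p == 0)  # legitimate correctly passed
fp = sum(1 for a, p in pairs if a == 0 and p == 1)  # false alarms
fn = sum(1 for a, p in pairs if a == 1 and p == 0)  # fraud that slipped through

accuracy = (tp + tn) / len(pairs)  # share of all predictions that are correct
precision = tp / (tp + fp)         # of flagged cases, how many were really fraud
recall = tp / (tp + fn)            # of real fraud cases, how many were flagged

print(accuracy, round(precision, 3), recall)
```

Here the model catches only half of the fraudulent transactions even though its accuracy looks moderate, which is why the right metric depends on the business objective.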

6. Deployment

The final phase is deployment. This is where you present your findings or integrate your model into a live system. Deployment can take many forms:

- A non-technical presentation to stakeholders summarizing key insights.

- A live model hosted on a cloud platform like AWS or Google Cloud that employees can access.

- A cyclical reporting process, such as a cybersecurity system that constantly monitors for threats and anomalies.

Often, the insights gained from one project will lead directly back to the Business Understanding phase for the next one, making the entire process a continuous loop of improvement.

Your First CRISP-DM Project: A Checklist

Ready to apply this framework to your own work? Here’s a simple checklist to guide you through your first data science project.

Business Understanding:

- What specific, measurable question are you trying to answer?

- Who are the stakeholders, and what do they need to know?

Data Understanding:

- Have you visually inspected the raw data? Just scroll through it!

- What are the key features and their data types?

- Have you run descriptive statistics (counts, mean, median)?

Data Preparation:

- Have you set up your environment and imported necessary libraries (pandas, Matplotlib)?

- How will you handle missing values and outliers?

- Is your data in a clean, computable format (like a DataFrame)?

Modeling:

- What is your baseline model?

- What algorithm(s) are you testing?

- Have you documented your model’s performance?

Evaluation:

- What do your R² and RMSE scores tell you about your model?

- How does your best model compare to your baseline?

- Does the model’s performance meet the business need?

Deployment:

- How will you present your findings or solution?

- What are the next steps or recommendations based on your work?

By following a structured process like CRISP-DM, you can build confidence, deliver meaningful results, and take a major step forward in your data science career.

Frequently Asked Questions

How does CRISP-DM apply to GenAI and large language model projects?

CRISP-DM can be used for GenAI/LLM projects by following the same structure: define the business use case, gather and prepare prompts or training data, train or fine-tune models, evaluate outcomes using task-specific metrics, and deploy with proper monitoring and guardrails.

What tools are best for each phase of CRISP-DM?

In the Business Understanding and Data Understanding phases, teams often use Python, SQL, data catalogs, and business intelligence platforms such as Tableau and Power BI to explore data and uncover insights that align with business goals. During Data Preparation, libraries and frameworks like pandas, dbt, Spark, and Polars help clean, transform, and structure data for analysis. For the Modeling phase, machine learning tools such as scikit-learn, AutoML platforms, TensorFlow, and PyTorch enable teams to build and train predictive models. In the Evaluation phase, experiment tracking and visualization tools like MLflow, Weights & Biases, and custom dashboards are used to assess model performance and reliability. Finally, in the Deployment phase, technologies like Docker, FastAPI, Vertex AI, AWS SageMaker, and Azure ML streamline model integration, scalability, and real-world application.

How should I document a CRISP-DM project?

Summarize the business objective, outline data sources, detail preparation steps, describe modeling and evaluation methods, report results clearly, and provide deployment instructions and recommendations for ongoing monitoring.

What are some common pitfalls in CRISP-DM projects?

Common pitfalls include vague problem statements, overlooking data quality issues, skipping proper evaluation, overfitting models, ignoring ethical or privacy concerns, and failing to align with stakeholder needs.